NVIDIA has officially released the successor to the GTX 480: the GeForce GTX 580. This card is powered by the GF110 GPU, which is a refresh of the GF100 GPU. For more details about what is new in the GF110, check this page out.

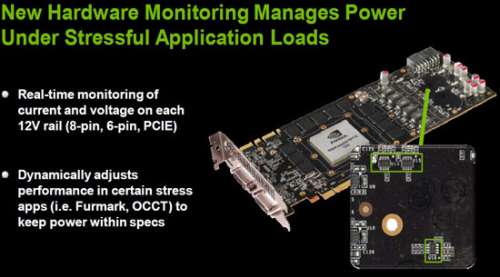

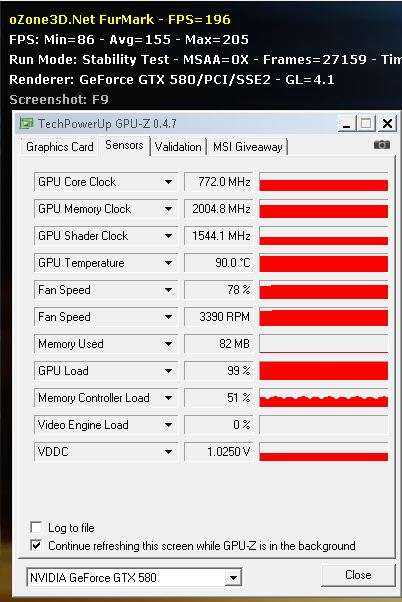

But the real news is elsewhere: the power draw is now under strict control. Like AMD with the Radeon HD 5000 series (see ATI Cypress (Radeon HD 5870) Cards Have Hardware Protection Against Power Virus Like FurMark and OCCT), NVIDIA has added dedicated hardware to limit the power draw. And, again like AMD with the Catalyst drivers (see FurMark Slowdown by Catalyst Graphics Drivers is Intentional!), there are some optimizations in ForceWare R262.xx when FurMark (or OCCT) is detected (hehe, maybe the weak link???). In short, when FurMark is detected, the GTX 580 is throttled back by the power consumption monitoring chips. Now we have the explanation of this strange FurMark screenshot.

1 – GeForce GTX 580 specifications

- GPU: GF110 @ 772MHz / 40nm

- Shader cores: 512 @ 1544MHz

- Memory: 1536MB GDDR5 @ 1002MHz real clock (or 4008MHz effective, see Graphics Cards Memory Speed Demystified for more details), 384-bit bus width

- Texture units: 64

- ROPs: 48

- TDP: 244 watts

- Power connectors: 6-pin + 8-pin

- Price: USD 500
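The relation between the real and effective memory clocks in the list above can be checked with a little arithmetic. The sketch below is only an illustration: it assumes GDDR5 moves four data words per real clock cycle (which matches the 1002MHz / 4008MHz pair quoted above), and the function names are made up for this example. The resulting bandwidth is a theoretical peak, not a measured value.

```python
# Values taken from the specification list above.
REAL_CLOCK_MHZ = 1002   # GDDR5 real clock
BUS_WIDTH_BITS = 384    # memory bus width

# Assumption: GDDR5 transfers 4 data words per real clock cycle,
# consistent with the 1002MHz real / 4008MHz effective figures.
TRANSFERS_PER_CLOCK = 4


def effective_rate_mhz(real_clock_mhz, transfers_per_clock=TRANSFERS_PER_CLOCK):
    """Effective data rate in MHz (millions of transfers per second)."""
    return real_clock_mhz * transfers_per_clock


def bandwidth_gb_per_s(real_clock_mhz, bus_width_bits):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = effective_rate_mhz(real_clock_mhz) * 1e6
    return transfers_per_second * bytes_per_transfer / 1e9


if __name__ == "__main__":
    print(effective_rate_mhz(REAL_CLOCK_MHZ), "MHz effective")
    print(round(bandwidth_gb_per_s(REAL_CLOCK_MHZ, BUS_WIDTH_BITS), 1), "GB/s peak")
```

With the GTX 580 numbers this gives 4008MHz effective and roughly 192.4 GB/s of theoretical peak bandwidth.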

2 – GTX 580 Power Draw Monitoring

To cut a long story short, NVIDIA uses a mix of hardware monitoring chips AND FurMark detection at the driver level to limit the power draw.

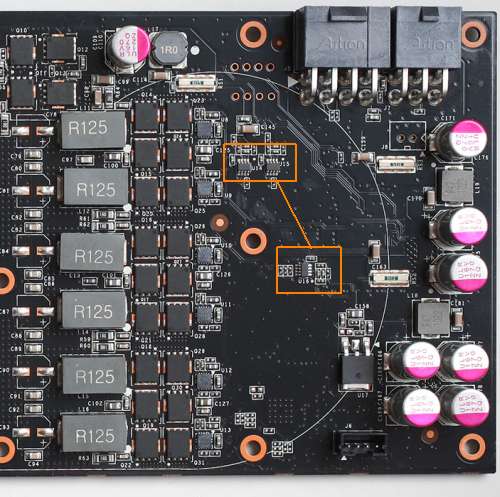

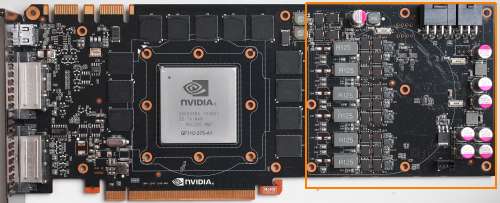

GTX 580 – Voltage and current monitoring chips, labelled U14, U15 and U16

From W1zzard / TPU:

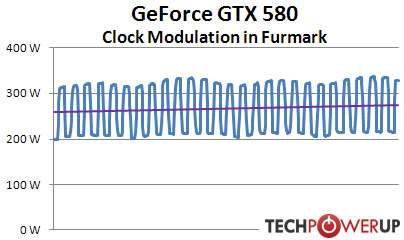

In order to stay within the 300 W power limit, NVIDIA has added a power draw limitation system to their card. When either Furmark or OCCT are detected running by the driver, three sensors measure the inrush current and voltage on all 12 V lines (PCI-E slot, 6-pin, 8-pin) to calculate power. As soon as the power draw exceeds a predefined limit, the card will automatically clock down and restore clocks as soon as the overcurrent situation has gone away. NVIDIA emphasizes this is to avoid damage to cards or motherboards from these stress testing applications and claims that in normal games and applications such an overload will not happen. At this time the limiter is only engaged when the driver detects Furmark / OCCT, it is not enabled during normal gaming. NVIDIA also explained that this is just a work in progress with more changes to come. From my own testing I can confirm that the limiter only engaged in Furmark and OCCT and not in other games I tested. I am still concerned that with heavy overclocking, especially on water and LN2 the limiter might engage, and reduce clocks which results in reduced performance. Real-time clock monitoring does not show the changed clocks, so besides the loss in performance it could be difficult to detect that state without additional testing equipment or software support.

I did some testing of this feature in Furmark and recorded card only power consumption over time. As you can see the blue line fluctuates heavily over time which also affects clocks and performance accordingly. Even though we see spikes over 300 W in the graph, the average (represented by the purple line) is clearly below 300 W. It also shows that the system is not flexible enough to adjust power consumption to hit exactly 300 W.

GeForce GTX 580 power draw under FurMark:

– 153 watts with the limiter (hw chip + driver)

– 304 watts without the limiter
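The mechanism described by TPU (three sensors on the 12 V inputs, a power limit, and a driver that only arms the limiter for FurMark or OCCT) can be sketched roughly as follows. This is a toy model, not NVIDIA's implementation: how the driver actually detects an application is not documented here, so the name matching, the throttled clock value and all function names are assumptions made for illustration.

```python
from dataclasses import dataclass

POWER_LIMIT_W = 300.0        # board power limit quoted in the text
STOCK_CLOCK_MHZ = 772        # GF110 core clock from the spec list
THROTTLED_CLOCK_MHZ = 386    # hypothetical reduced clock, chosen for illustration
DETECTED_APPS = {"furmark", "occt"}  # assumption: detection by application name


@dataclass
class RailReading:
    """One 12 V input as seen by a monitoring chip."""
    name: str
    volts: float
    amps: float


def board_power(readings):
    """Total power in watts: sum of voltage * current over all inputs."""
    return sum(r.volts * r.amps for r in readings)


def select_clock(app_name, readings):
    """Throttle only when a detected app is running AND power exceeds the limit."""
    limiter_armed = app_name.lower() in DETECTED_APPS
    if limiter_armed and board_power(readings) > POWER_LIMIT_W:
        return THROTTLED_CLOCK_MHZ
    return STOCK_CLOCK_MHZ


if __name__ == "__main__":
    # Made-up sensor values that add up to 306 W.
    heavy = [
        RailReading("PCI-E slot", 12.0, 5.5),
        RailReading("6-pin", 12.0, 7.0),
        RailReading("8-pin", 12.0, 13.0),
    ]
    print(board_power(heavy))              # total watts over the three inputs
    print(select_clock("FurMark", heavy))  # limiter armed and over the limit
    print(select_clock("game", heavy))     # same load, limiter not armed
```

The point of the sketch is the two-part condition: the same 306 W load only triggers a clock reduction when the running application is on the detection list, which matches the observation that games and overclocking are not throttled.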

Conclusion from W1zzard / TPU:

A feature that will certainly be discussed at length in forums is the new power draw limiting system. When the card senses it is overloaded by either Furmark or OCCT, the card will reduce clocks to keep power consumption within the board power limit of 300 W. Such a system seems justified to avoid damage to motherboard and VGA card and allows NVIDIA to design their product robustness with real loads in mind. NVIDIA stresses that this system is designed not to limit overclocking or voltage tuning and that they will continue making improvements to it. Right now I also see reviewers affected because many rely on Furmark for testing temperatures, noise, power and other things which will make the review production process a bit more complex too. For the every day gamer the power draw limiter will not have any effect on performance.

From Hexus

GTX 480 is one hot-running beastie. Give it some FurMark love and watch the watts spiral out of control, way above the rated 250W TDP, and hear the reference cooler’s fan run fast enough to sound like a turbine. The cooler’s deficiencies have been well-documented in the press. NVIDIA doesn’t like you running FurMark, mainly because it’s not indicative of real-world gameplay and causes the GPU to run out of specification. We like it because it makes high-end cards squeal!

So concerned is NVIDIA with the pathological nature of FurMark and other stress-testing apps, it is putting a stop to it by incorporating hardware-monitoring chips on the PCB. Their job is to ensure that the TDP of the card isn’t breached by such apps, and they do this by monitoring the load on each 12V rail.

Should a specific application hammer the GPU to the point where the power-draw is way past specification, as FurMark does to a GTX 480, the hardware chips will simply clock the card down. Pragmatically, running FurMark v1.8.2 on the GTX 580 results in half the frame-rate (and 75 per cent of the load) that we experience on a ‘480 with the same driver. The important point is that the power management is controlled by a combination of software driver and hardware monitoring chips.

NVIDIA goes about the power-management game sensibly, because the TDP cap only comes into play when the driver and chips determine that a stress-testing app is being used – currently limited to FurMark v1.8+ and OCCT – so users wishing to overclock the card and play real-world games are able to run past the TDP without the GPU throttling down. Should new thermal stress-testing apps be discovered, NVIDIA will invoke power capping for them with a driver update.

From AnandTech:

NVIDIA’s reasoning for this change doesn’t pull any punches: it’s to combat OCCT and FurMark. At an end-user level FurMark and OCCT really can be dangerous – even if they can’t break the card any longer, they can still cause other side-effects by drawing too much power from the PSU. As a result having this protection in place more or less makes it impossible to toast a video card or any other parts of a computer with these programs. Meanwhile at a PR level, we believe that NVIDIA is tired of seeing hardware review sites publish numbers showcasing GeForce products drawing exorbitant amounts of power even though these numbers represent non real world scenarios. By throttling FurMark and OCCT like this, we shouldn’t be able to get their cards to pull so much power. We still believe that tools like FurMark and OCCT are excellent load-testing tools for finding a worst case scenario and helping our readers plan system builds with those scenarios in mind, but at the end of the day we can’t argue that this isn’t a logical position for NVIDIA.

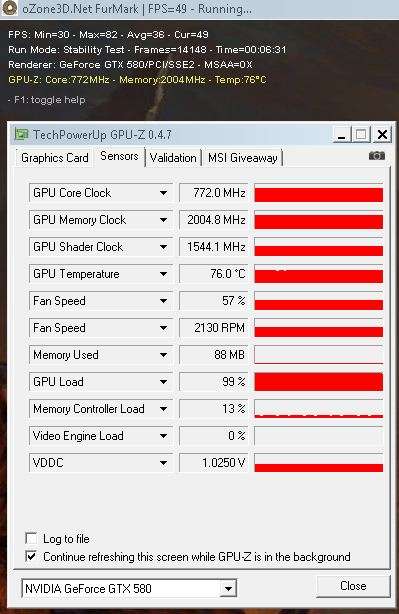

Now something really interesting, guys, thanks to Fudzilla:

GTX 580 and FurMark 1.8.2: the GPU temp does not exceed 76°C

GTX 580 and FurMark 1.6.x: the GPU temp reaches 90°C!!!

My conclusion: I NEED A GTX 580!!!!

3 – Performance

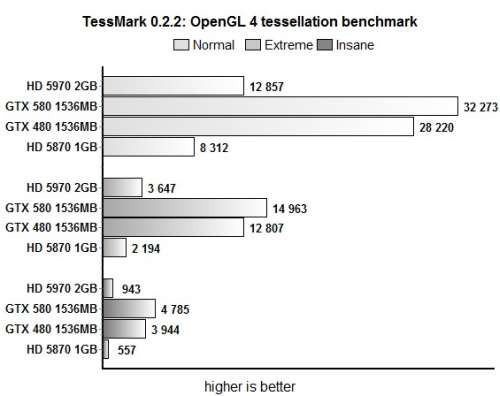

OpenGL 4.0: TessMark

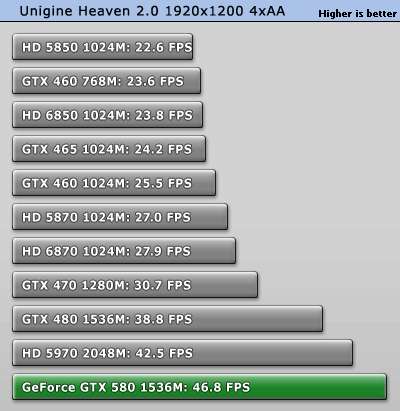

Direct3D 11: Unigine Heaven performance

Nice test on… an unfinished prototype with beta drivers :p

I would wait for the official launch with mature drivers!

I don’t think that proto card you used includes the new vapor chamber though.

Can’t you make your bench less aggressive and not pushing GPU into red RPM speed, that’s the problem.

“GeForce GTX 580 power monitoring” @ customers’ help site:

http://nvidia.custhelp.com/cgi-bin/nvidia.cfg/php/enduser/std_adp.php?p_faqid=2857

@Stefan: Thanks!

Posted: http://www.geeks3d.com/20101109/geforce-gtx-580-power-monitoring-details/

JeGX! you know, you are a horrible, horrible person!!! you are so mean to poor AMD and NVidia! SHAME!!! 😀

@filip007: A benchmark _needs_ to be “aggressive”. That’s the point of the whole thing.

who cares about crappy power virus’s like furmark. They aren’t even indicative of real world stability.

Well what do you expect from a GPU that is more than a year late.

Time to figure out how drivers detect the program!

The other option is to find those chips on the SMBus and disable them….

(I wonder if affects MSI FurMark when the program is locked to low stress mode)

NVIDIA has a vested interest in ensuring that Furmark doesn’t destroy video cards. It is called Warranty coverage.

If somebody takes their new 580 home from the store and stuffs it in their computer and then fires up FurMark they don’t want the video card to be destroyed by this because then they have a customer saying DOA and a warranty claim being processed.

300 W and high heat don’t affect the GTX 580, but heavy dust may affect this card. 😉

My GTX 480 died (it froze without any artifacts; after that, the card stopped responding at the Windows login screen) after almost 43 months (about 3.5 years), despite reaching 91 ºC in FurMark. I have a “new” GTX 580 3GB! 🙂

Do the 600 and 700 series have an “anti-FurMark feature”?